Ever wonder why some chatbots feel eerily human while others fall flat?

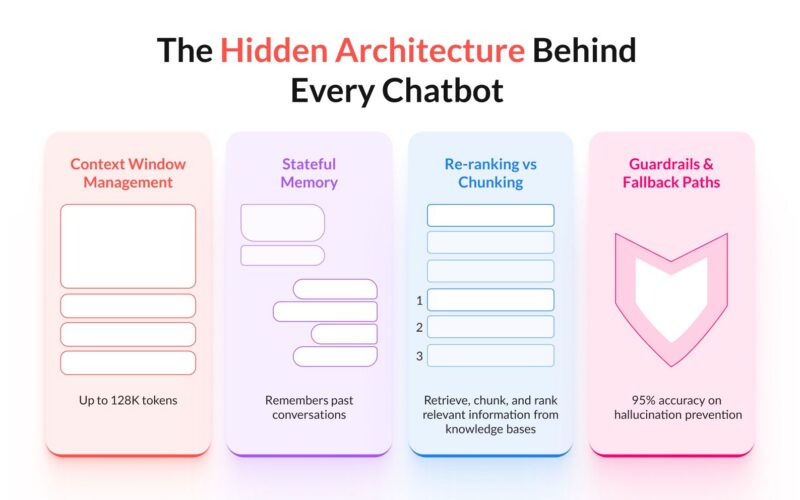

Working with AI chatbots made me realize that it comes down to four critical architectural elements:

1. Context Window Management

Good chatbots maintain coherence over extended conversations by balancing token efficiency with memory.

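Many modern LLMs support context windows of 128K tokens or more (≈250 pages!), but they still face the “lost in the middle” problem: details buried in the middle of a long input are recalled less reliably than those near the start or end.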
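To make the token-versus-memory trade-off concrete, here is a minimal sketch of trimming a conversation to a token budget. It assumes a crude one-token-per-word estimate and hypothetical helper names (`estimate_tokens`, `trim_to_budget`); a real system would count tokens with the model's own tokenizer.

```python
from typing import Dict, List


def estimate_tokens(text: str) -> int:
    # Crude assumption: one token per whitespace-separated word.
    # Swap in the model's real tokenizer for accurate counts.
    return len(text.split())


def trim_to_budget(messages: List[Dict[str, str]], max_tokens: int) -> List[Dict[str, str]]:
    """Keep system messages plus the newest messages that fit the budget."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]

    # System messages are always kept, so they are charged first.
    budget = max_tokens - sum(estimate_tokens(m["content"]) for m in system)

    kept: List[Dict[str, str]] = []
    for message in reversed(rest):  # walk from newest to oldest
        cost = estimate_tokens(message["content"])
        if cost > budget:
            break  # stop at the first message that does not fit
        kept.append(message)
        budget -= cost

    # Restore chronological order before returning.
    return system + list(reversed(kept))
```

Dropping the oldest turns first is the simplest policy. It keeps recent exchanges intact, at the cost of losing early context unless that context is summarized elsewhere.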

2. Stateful Memory

This is what separates amateur bots from professional ones. My team implements hybrid approaches:

- Conversation Buffer Memory for recent exchanges

- Conversation Summary Memory for older context

- Secure databases for cross-session retention
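The buffer-plus-summary idea can be sketched in plain Python. This is an illustration under stated assumptions, not tied to any library: `HybridMemory` and `naive_summarize` are hypothetical names, the summarizer is a placeholder for an LLM call, and cross-session storage is left out.

```python
from collections import deque
from typing import Callable, Deque, List, Tuple

Turn = Tuple[str, str]  # (role, text)


def naive_summarize(previous_summary: str, turn: Turn) -> str:
    # Placeholder summarizer: a real system would ask an LLM to fold
    # the evicted turn into the running summary.
    role, text = turn
    snippet = f"{role}: {text[:40]}"
    return f"{previous_summary} | {snippet}" if previous_summary else snippet


class HybridMemory:
    """Keeps recent turns verbatim and folds older turns into a summary."""

    def __init__(
        self,
        buffer_size: int = 4,
        summarize: Callable[[str, Turn], str] = naive_summarize,
    ) -> None:
        self.buffer: Deque[Turn] = deque()
        self.buffer_size = buffer_size
        self.summary = ""
        self.summarize = summarize

    def add(self, role: str, text: str) -> None:
        self.buffer.append((role, text))
        # When the buffer overflows, move the oldest turn into the summary.
        while len(self.buffer) > self.buffer_size:
            oldest = self.buffer.popleft()
            self.summary = self.summarize(self.summary, oldest)

    def as_prompt_context(self) -> str:
        lines: List[str] = []
        if self.summary:
            lines.append(f"Summary of earlier conversation: {self.summary}")
        lines.extend(f"{role}: {text}" for role, text in self.buffer)
        return "\n".join(lines)
```

Persisting `summary` and `buffer` to a database keyed by user would be one way to extend this across sessions.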

3. Chunking vs. Re-ranking

For knowledge-intensive applications, how you process long-form content matters.

I’ve found semantic chunking paired with model-based re-ranking delivers the best balance of relevance and performance.
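Here is a rough sketch of the chunk-then-re-rank flow. Both stages are simplified stand-ins, and I'm labeling them as such: paragraph splitting substitutes for true semantic chunking, and a word-overlap score substitutes for a model-based re-ranker. All function names are hypothetical.

```python
import re
from typing import List, Tuple


def chunk_by_paragraph(text: str) -> List[str]:
    # Stand-in for semantic chunking: split on blank lines.
    # A semantic chunker would split where the topic shifts instead.
    return [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]


def overlap_score(query: str, chunk: str) -> float:
    # Stand-in for a model-based re-ranker: the fraction of distinct
    # query words that also appear in the chunk.
    query_words = set(re.findall(r"\w+", query.lower()))
    chunk_words = set(re.findall(r"\w+", chunk.lower()))
    if not query_words:
        return 0.0
    return len(query_words & chunk_words) / len(query_words)


def rerank(query: str, chunks: List[str], top_k: int = 2) -> List[Tuple[float, str]]:
    """Score every chunk against the query and return the top_k best."""
    scored = [(overlap_score(query, chunk), chunk) for chunk in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]
```

The structure stays the same when you upgrade the pieces: replace `chunk_by_paragraph` with an embedding-aware splitter and `overlap_score` with a call to a re-ranking model.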

4. Guardrails / Fallback Paths

These are the core of reliable systems. For this, we implement:

- Small language model (SLM) detection engines (95% accuracy on hallucination detection)

- Confidence thresholds that trigger strategic fallbacks

- Seamless human handoffs for complex queries
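The threshold-and-fallback logic above might look something like this. It is a sketch only: the `Draft` type, the `route` function, and the 0.8 / 0.5 cutoffs are illustrative assumptions, and the confidence score is assumed to come from a separate detection step.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Draft:
    text: str
    # Assumed to be produced by a separate scoring step, in [0.0, 1.0].
    confidence: float


def route(
    draft: Draft,
    answer_threshold: float = 0.8,   # illustrative cutoff, tune per use case
    clarify_threshold: float = 0.5,  # illustrative cutoff, tune per use case
) -> Dict[str, str]:
    """Pick an action based on how confident we are in the draft answer."""
    if draft.confidence >= answer_threshold:
        return {"action": "answer", "text": draft.text}
    if draft.confidence >= clarify_threshold:
        # Middle band: ask the user for more detail instead of guessing.
        return {
            "action": "clarify",
            "text": "I want to get this right. Could you add a bit more detail?",
        }
    # Low confidence: hand the conversation to a person.
    return {"action": "handoff", "text": "Let me connect you with a human agent."}
```

Keeping the routing in one small function makes the thresholds easy to tune and easy to test.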

The magic happens when these components work in harmony. I’ve seen response quality improve by 40% when properly integrated.

Have you ever come across a chatbot that feels human? Tell me about it in the comments!